Polarization and Social Media

The Dark Nature of the Information Economy

With the rise of the internet came the whispered promise of a vastly superior digital means of disseminating information – the world would awaken to find itself encircled by an intricate web through which news would flow like a torrent, washing over all of humanity and cleansing us of our ignorance. Next, social media platforms came online, and this time the promise had more to do with our social networks. We were told this new technology would bring us together like never before, and although there are resounding benefits, such as empowering social movements or nurturing relationships from afar, there is also a dark side.[1]

Recently, belief in the potential use of the drug hydroxychloroquine as a treatment for Covid-19 has become increasingly polarized.[2] Polarization can be characterized as a situation in which groups within a society come to hold stable, mutually exclusive beliefs even when these groups can communicate and interact with one another. Curiously, it seems that whether or not one thinks hydroxychloroquine will be effective against Covid-19 rests strongly on one’s political persuasion – a radical politicization of truth. How have we arrived at this moment amidst a crisis of fake news, viral misinformation, and such extremes of polarized beliefs?

A healthy democracy rests not so much upon a shared set of beliefs as upon a shared set of information. If all I see is news about the impacts of Apollo 11’s successful moon landing, and all you see is news about how the NASA moon landings are a hoax, it is exceedingly unlikely that either of us will judge the other’s evidence as credible, given that neither of us has been exposed to it. Of course, we are normally exposed to the other side of the story by interacting with each other, but what if that encounter is removed, leaving us each with only our own isolated beliefs? This state has increasingly become the norm, and it exemplifies the essence of what Eli Pariser has called a filter bubble.[3]

What is a filter bubble and how is it created?

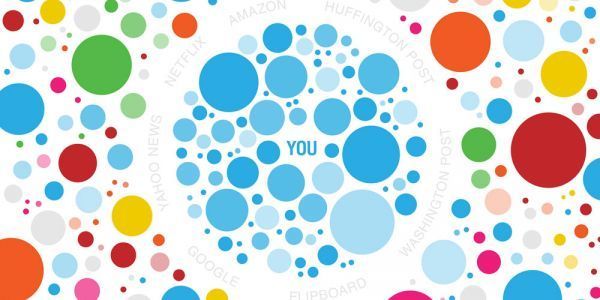

A filter bubble is the informational ecosystem that we each inhabit individually, where the boundary of the bubble acts as a filtering device, procuring for us only personally relevant information. At first glance, the aims of the filter bubble would seem to be reasonable and even desirable. If a parent of a young child searches for “dragons” on Google, and the first results contain links to the How to Train Your Dragon book series, this is actually helpful (assuming the parent is looking for chapter books for beginners). However, there is a flipside to these filters.

If filter bubbles are informational ecosystems, algorithms are the engineers responsible for their construction. These filtering algorithms are increasingly complex – so complex, in fact, that even their designers may not fully understand how they operate. For simplicity’s sake we can think of them as basic mechanisms which analyze data and then use this data to generate predictions. To clarify, the data upon which the algorithms feed is our personal data, and the predictions generated concern our behaviors and beliefs – what are we likely to do or think? This system is powered by strong economic incentives, what some call “surveillance capitalism”.[4]
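To make the "analyze data, then predict" idea concrete, here is a minimal sketch in Python. It is a toy illustration only, not how any real platform works: the function name `predict_click_probability`, the `(topic, clicked)` history format, and the simple smoothed click rate are all assumptions introduced for this example.

```python
from collections import defaultdict


def predict_click_probability(history):
    """Estimate, per topic, how likely a user is to click the next item.

    `history` is a hypothetical list of (topic, was_clicked) pairs.
    The estimate is a smoothed click rate, (clicks + 1) / (shown + 2),
    so a topic seen only a few times is not scored as exactly 0 or 1.
    """
    shown = defaultdict(int)
    clicked = defaultdict(int)
    for topic, was_clicked in history:
        shown[topic] += 1
        if was_clicked:
            clicked[topic] += 1
    return {topic: (clicked[topic] + 1) / (shown[topic] + 2) for topic in shown}


# Invented browsing history: the "personal data" the predictor feeds on.
example_history = [
    ("politics", True),
    ("politics", True),
    ("sports", False),
    ("politics", False),
    ("sports", False),
]
example_predictions = predict_click_probability(example_history)
```

Even this crude predictor ranks politics above sports for this user; a filter built on it would begin to favor the former, and real systems draw on far more data than five clicks.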

There is a saying which goes, “If you’re not paying for it, you’re not the customer; you’re the product being sold”. This aphorism points to the hidden economy operating beneath the surface on search engines like Google, platforms like Facebook, or online retailers like Amazon. Each of these tech companies generates substantial annual revenue from your data, largely by selling advertisers the ability to target you. This occurs partly through targeted ads, which seem to follow you across various websites. For example, if you’ve just browsed for some new shoes at a retailer’s site without making a purchase, you may find a floating ad for that same pair of shoes greeting you when you then check the weather forecast at your favorite site. Even if you haven’t recently searched for a potential purchase, there are data brokers (like Acxiom) which collect personal data on each of us, with some of these databases containing up to 1,500 data points per person – details like what medications you use or whether you are right- or left-handed.[5]

This economic model depends upon capturing our attention and keeping us engaged and clicking online. Tristan Harris, a founder of the Center for Humane Technology, calls this the attention economy, and he points out that tech companies are incentivized to discover what is most relevant to each of us and to employ these filter algorithms to find and return this content to us on whatever platform we’re utilizing. There is even an addictive quality to consuming social media that operates in much the same way that our brains respond to drugs such as tobacco, alcohol, and heroin,[6] and negative effects such as poor physical health and depression may be connected to this kind of use.[7] The question then becomes one of individual autonomy – are we really free to choose how we want to spend our online time? One concern is that if our information diets are chosen based on what we crave rather than what is good for us, we may experience a stark misalignment between the narrow slice of information curated for us and the entire spectrum of information in the wild.

The Fracturing of Shared Reality

One of the ways in which data companies learn more about who you are is through click signals – digital indicators of what types of content you prefer. If you are a political conservative, it’s likely that you will tend to engage with content that supports your side of the aisle, and the same holds true if you are liberal-minded. In a TED talk, Eli Pariser recounts his experience with Facebook’s homogenization of his news feed: he realized that all his conservative friends had vanished from view.[8] No longer could he see what the other side was thinking – Facebook’s algorithm had decided these alternative viewpoints were not relevant.
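The feedback loop between click signals and a narrowing feed can be sketched with a toy simulation. This is an illustration under invented assumptions, not a description of Facebook's actual ranking: the function `simulate_feed`, the two content categories, the `boost` factor, and the user who clicks only agreeable content are all hypothetical.

```python
def simulate_feed(rounds, boost=1.5):
    """Track how much of a feed shows views the user disagrees with.

    Assumptions (all hypothetical): the feed splits its space between
    two kinds of content in proportion to their weights; the user clicks
    only on content they agree with; each click multiplies that
    category's weight by `boost`.
    Returns the disagreeing content's share of the feed in each round.
    """
    weights = {"agrees_with_user": 1.0, "disagrees_with_user": 1.0}
    shares = []
    for _ in range(rounds):
        total = sum(weights.values())
        shares.append(weights["disagrees_with_user"] / total)
        # The click signal: only agreeable content gets engagement,
        # so only its weight grows before the next round.
        weights["agrees_with_user"] *= boost
    return shares


# Ten rounds, starting from an evenly balanced feed.
example_shares = simulate_feed(10)
```

In this sketch the opposing viewpoint starts with half the feed and shrinks every round without anyone deciding to remove it. That is the pattern Pariser describes, though the real mechanisms are far more complicated.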

One of the biggest concerns around the effects of personalized content is that our thoughts and behaviors may be susceptible to nudges, small suggestions which influence us. There is some evidence that Facebook, for example, can successfully manipulate users’ emotional states by curating either a “positive” or “negative” news feed for users, producing what has been called “massive-scale [emotional] contagion via social networks”.[9]

If our moods influence our behavior and the digital content we are exposed to can influence our moods, then our behavior can be manipulated. Through personalized content, whether curated with the best intentions or the worst, we are susceptible to being nudged. The line between persuasion and control is a blurry one.

REFERENCES

[1] Lenhart, A., Smith, A., Anderson, M., Duggan, M., & Perrin, A. (2015). Teens, technology & friendship (Vol. 10). Pew Research Center. https://www.pewresearch.org/internet/2015/08/06/teens-technology-and-friendships/

[2] O’Connor, C. & Weatherall, J. O. (2020, May 4). Hydroxychloroquine and the political polarization of science: how a drug became an object lesson in political tribalism. Boston Review. https://bostonreview.net/science-nature-politics/cailin-oconnor-james-owen-weatherall-hydroxychloroquine-and-political

[3] Pariser, E. (2011a). The filter bubble: what the internet is hiding from you. Viking Press.

[4] Zuboff, S. (2019). The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. New York: Hachette Book Group.

[5] Pariser, E. (2011a). The filter bubble: what the internet is hiding from you. Viking Press, pp. 43-44.

[6] Berridge, K. C. (2007). The debate over dopamine’s role in reward: the case for incentive salience. Psychopharmacology, 191(3), 391-431.

[7] Keles, B., McCrae, N., & Grealish, A. (2020). A systematic review: the influence of social media on depression, anxiety and psychological distress in adolescents. International Journal of Adolescence and Youth, 25(1), 79-93.

[8] Pariser, E. (2011b, May). Beware online filter bubbles [Video]. TED Conferences. https://www.ted.com/talks/eli_pariser_beware_online_filter_bubbles

[9] Kramer, A. D., Guillory, J. E., & Hancock, J. T. (2014). Experimental evidence of massive-scale emotional contagion through social networks. Proceedings of the National Academy of Sciences, 111(24), 8788-8790. See also: Tufekci, Z. (2015). Algorithmic harms beyond Facebook and Google: emergent challenges of computational agency. Colorado Technology Law Journal, 13(203), 203-16.